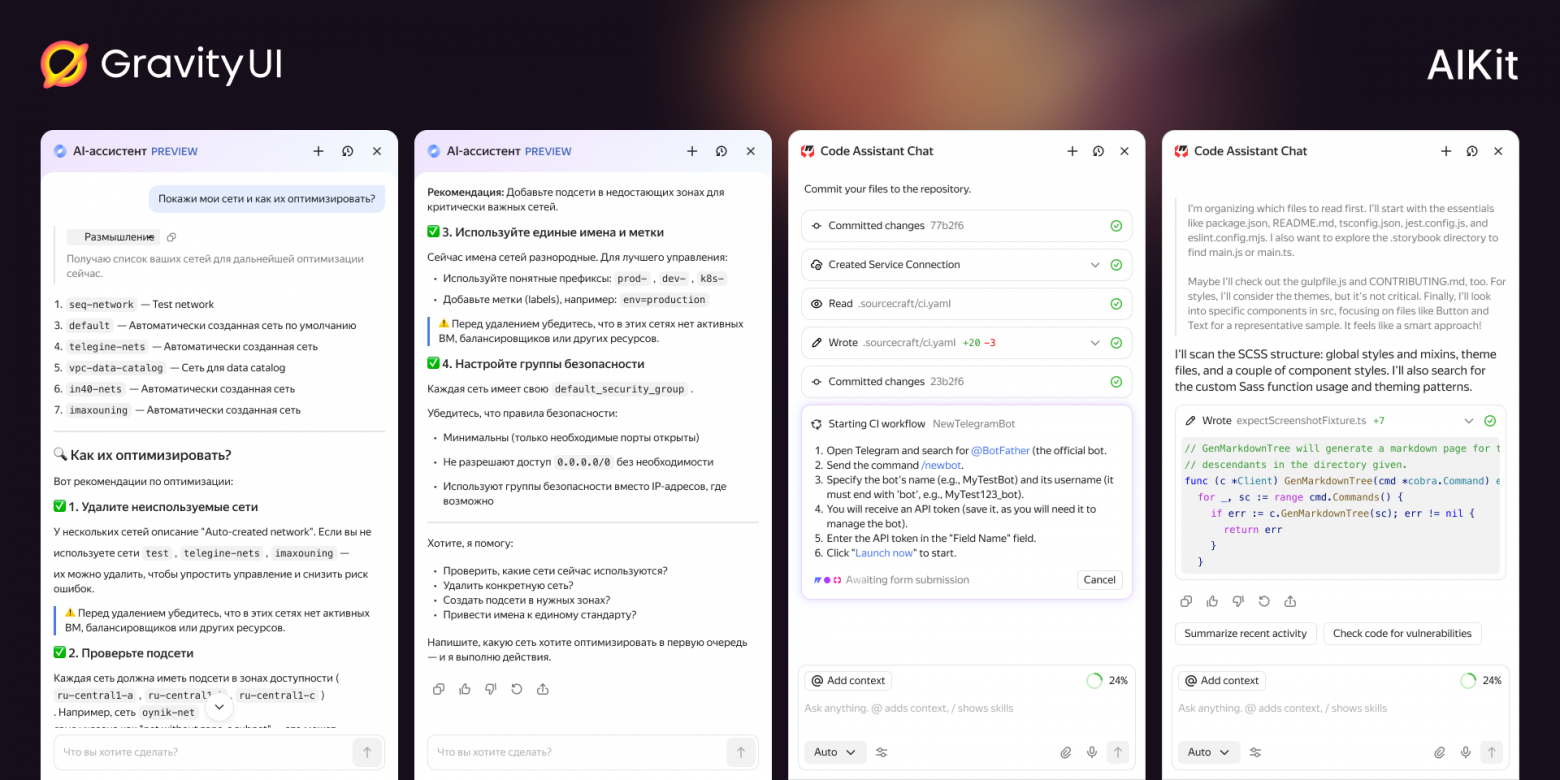

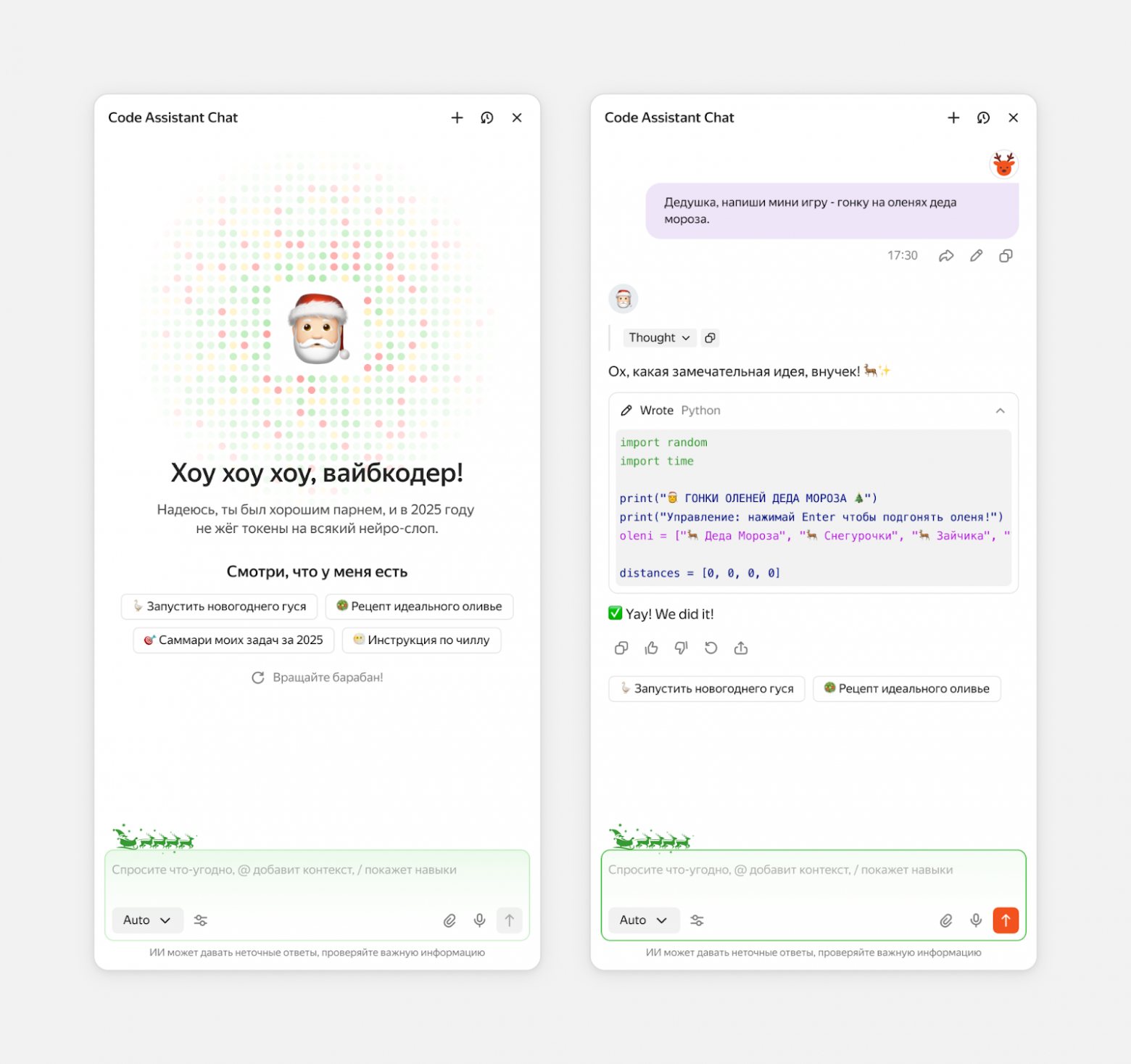

Examples of chats built with AIKit in the light theme

One Chat to Rule Them All: We Built an AI Assistant Library Based on Gravity UI

An article about the launch of the AIKit library: what we focused on during development, why it’s needed, and how to use it in your own projects.

Over the past year, we’ve seen a boom in AI assistants, and Yandex Cloud interfaces were no exception: a support chatbot backed by a model would appear in one product, an agent for operational tasks would show up in the console. Teams connected models, designed dialog logic, created UI, and assembled chats, each on their own.

Different teams built interfaces on the shared Gravity UI framework, but over time so many variations appeared that it became hard to maintain a consistent user experience. Colleagues also increasingly found themselves spending time on the same solutions again and again.

To stop reinventing the wheel every time, we consolidated our accumulated practices into a single approach and built a tool for AI chatbots: @gravity-ui/aikit. It lets you create a full-fledged assistant interface in a few days and still easily adapt it to different scenarios.

My name is Ilya Lomtev, I’m a Senior Developer on the Foundation Services team at Yandex Cloud. In this article, I’ll explain why we decided to build AIKit, how it works, share a bit about our future plans — and what you can try on your side.

How and why we built AIKit

Over the past year, the number of services with AI assistants in Yandex Cloud has grown, for example:

- Code Assistant Chat in SourceCraft: the assistant helps developers write code, and in AI agent mode it creates and configures repositories, runs CI/CD processes, answers documentation questions, and automates tasks. It can also manage issues and pull requests, and work with code: explain it, create files, and edit files.
- An AI assistant in the cloud console: an assistant designed to manage resources in Yandex Cloud. Its main goal is to help configure, change, and manage cloud infrastructure quickly and safely, hiding the complexity of interacting with APIs and tools.

A dozen chats emerged in the ecosystem, each with its own logic, message format, and set of corner cases.

We found that teams ended up with roughly the same set of tasks. What most of them needed:

- neatly render user and assistant messages,
- properly organize response streaming,
- show an “assistant is typing” indicator,
- handle errors like dropped connections or retries.

The tasks are essentially the same, but there are many ways to solve them — and the UX differs. For example, chat history placement and display: it can be a separate screen that opens like a menu, or a list of chats in a popup.

A problem became apparent: the experience across different chats varied significantly. In some places the assistant streamed the response, and in others it displayed a ready-made text at once. In one interface messages were grouped, while in another they were shown as one continuous feed. This broke the overall UX — users move between products within the same ecosystem, but the assistant feels completely different.

It also became noticeable that rolling out new model features was getting harder and harder. To communicate capabilities like tool use, multimodality, or structured tool outputs to users, we had to align the contract, update backends, and then update the UI in each team separately. In such conditions, any changes took a lot of time and didn’t scale well.

We wanted to stop this growth in variability and bring back predictability. To do that, we needed to unify the data model and working patterns, provide ready-made components and hooks so teams wouldn’t have to start from scratch, and still leave room for customization — because everyone’s scenarios are different.

That’s how we arrived at the idea of a standalone library, @gravity-ui/aikit: an extension of Gravity UI that follows the same principles but is focused on modern AI scenarios such as dialogs, assistants, and multimodality.

AIKit architecture: what we built on

When designing AIKit, we leaned on the experience of AI SDK and several fundamental principles.

Atomic Design at the core: the whole library is built from atoms to pages. This structure provides a clear hierarchy, enables component reuse, and, when needed, lets you change behavior at any level.

Completely SDK-agnostic: AIKit doesn’t depend on any specific AI provider. You can use OpenAI, Alice AI LLM, or your own backend — the UI receives data through props, while state and requests remain on the product side.
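As a rough illustration of keeping the provider on the product side, the sketch below maps messages in the common role-plus-content chat format into a UI-facing shape. `UiMessage`, `ProviderMessage`, and `toUiMessages` are hypothetical names made up for this example; they are not AIKit types, and the real data model may look different.

```typescript
// Hypothetical UI-facing message shape (an assumption, not the AIKit type).
type UiMessage = {
    id: string;
    role: 'user' | 'assistant';
    content: Array<{type: 'text'; data: {text: string}}>;
};

// A provider message in the widespread "role + content" chat format.
type ProviderMessage = {
    role: 'system' | 'user' | 'assistant';
    content: string;
};

// Convert provider messages into UI messages. System prompts are skipped
// because they are usually not shown to the user.
function toUiMessages(messages: ProviderMessage[]): UiMessage[] {
    const result: UiMessage[] = [];
    messages.forEach((message, index) => {
        if (message.role === 'system') {
            return;
        }
        result.push({
            id: `msg-${index}`,
            role: message.role,
            content: [{type: 'text', data: {text: message.content}}],
        });
    });
    return result;
}
```

With an adapter like this living in the product code, swapping the provider only means writing another mapping function; the components keep receiving the same props.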

Two levels of usage for complex scenarios: there is a ready-made component that works out of the box, and there is a hook with logic that lets you fully control the UI. For example, you can use PromptInput or build your own input based on usePromptInput. This gives flexibility without having to rewrite the foundation.

An extensible type system. To ensure consistency and type safety, we built an extensible data model. Messages are represented by a single typed structure: there are user messages, assistant messages, and several base content types — text (text), model reasoning (thinking), tools (tool). At the same time, you can add your own types via MessageRendererRegistry.

Everything is typed in TypeScript, which helps build complex scenarios faster and avoid mistakes during development.

```tsx
// Assumption: these names are exported from the package root.
import {
    AssistantMessage,
    createMessageRendererRegistry,
    registerMessageRenderer,
} from '@gravity-ui/aikit';
import type {MessageContentComponentProps, TMessageContent} from '@gravity-ui/aikit';

// 1. Define the data type
type ChartMessageContent = TMessageContent<
    'chart',
    {
        chartData: number[];
        chartType: 'bar' | 'line';
    }
>;

// 2. Create the renderer component
const ChartRenderer = ({part}: MessageContentComponentProps<ChartMessageContent>) => {
    return <div>Chart visualization: {part.data.chartType}</div>;
};

// 3. Register the renderer
const customRegistry = registerMessageRenderer(createMessageRendererRegistry(), 'chart', {
    component: ChartRenderer,
});

// 4. Use it in AssistantMessage
<AssistantMessage message={message} messageRendererRegistry={customRegistry} />;
```
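To make the idea of a renderer registry more concrete, here is a minimal framework-free sketch of how a lookup by content type could work. It is not the library's implementation: `Registry`, `register`, and `renderPart` are hypothetical names, and plain strings stand in for React elements.

```typescript
// One piece of message content, identified by its type.
type ContentPart = {type: string; data: unknown};

// A renderer turns a content part into output (a string here, an element in a real UI).
type Renderer = (part: ContentPart) => string;

type Registry = Readonly<Record<string, Renderer>>;

// Return a new registry instead of mutating the old one,
// so a shared default registry stays untouched.
function register(registry: Registry, type: string, renderer: Renderer): Registry {
    return {...registry, [type]: renderer};
}

// Pick the renderer by content type and fall back gracefully for unknown types.
function renderPart(registry: Registry, part: ContentPart): string {
    const renderer = Object.prototype.hasOwnProperty.call(registry, part.type)
        ? registry[part.type]
        : undefined;
    return renderer ? renderer(part) : `[unsupported content type: ${part.type}]`;
}

const baseRegistry: Registry = {text: (part) => String(part.data)};
const chartRegistry = register(baseRegistry, 'chart', () => '<chart/>');
```

The fallback branch matters in practice: a backend may start sending a new content type before every product has registered a renderer for it.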

Finally, we provided theming via CSS variables, added i18n (RU/EN), ensured accessibility (ARIA, keyboard navigation), and set up visual regression tests using Playwright Component Testing in Docker — and the library was ready for production use.

Under the hood

At the core of AIKit is a unified dialog model. To create it, we first had to figure out the message hierarchy.

Messages themselves are multifaceted entities. The first message from the LLM arrives as a single stream, but it can contain many nested parts: reasoning, suggestions, and tool calls that together answer a single question. From the backend’s point of view, all of these sub-messages are one message, yet in simpler LLM usage each of them can also be a standalone message.

That’s why we kept the option to use the chat in both ways: messages can be nested within each other, or they can be flat — it all depends on your needs.
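The difference between the two layouts can be sketched with plain data. The types below are simplified assumptions made for this example, not the AIKit data model: a nested message carries several parts, and `flatten` turns each part into its own message.

```typescript
type Part = {type: 'text' | 'thinking' | 'tool'; text: string};

// Nested form: one backend message carries several parts.
type NestedMessage = {id: string; role: 'user' | 'assistant'; parts: Part[]};

// Flat form: every part is a separate message in the feed.
type FlatMessage = {id: string; role: 'user' | 'assistant'; type: Part['type']; text: string};

function flatten(messages: NestedMessage[]): FlatMessage[] {
    const result: FlatMessage[] = [];
    messages.forEach((message) => {
        message.parts.forEach((part, index) => {
            result.push({
                // Derive a stable id from the parent id and the part position.
                id: `${message.id}:${index}`,
                role: message.role,
                type: part.type,
                text: part.text,
            });
        });
    });
    return result;
}
```

Which form is more convenient depends on the backend contract: the nested form mirrors a single streamed response, while the flat form is easier to render as a simple feed.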

State management remains on the service side. AIKit doesn’t store data itself — it receives it from outside. Teams can use React State, Redux, Zustand, Reatom — whatever is convenient. We only provide hooks that encapsulate typical UI logic, for example:

- smart scrolling with useSmartScroll;
- working with dates, e.g., locale-aware date formatting via useDateFormatter;
- handling tool messages via useToolMessage;
- and everything else you need to build a dialog.
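To give a feel for the kind of logic such hooks encapsulate, here is a minimal sketch of the decision behind "smart" scrolling: follow new content only when the user is already near the bottom. This is an illustration under our own assumptions, not the actual useSmartScroll code; the function names and the 48 px threshold are made up for the example.

```typescript
type ScrollMetrics = {scrollTop: number; clientHeight: number; scrollHeight: number};

// Distance in pixels between the bottom edge of the viewport and the end of the content.
function distanceFromBottom({scrollTop, clientHeight, scrollHeight}: ScrollMetrics): number {
    return Math.max(0, scrollHeight - clientHeight - scrollTop);
}

// Decide whether to keep the view pinned to the bottom when new content arrives.
// `metrics` must be measured before the new content is appended.
function shouldStickToBottom(metrics: ScrollMetrics, threshold = 48): boolean {
    return distanceFromBottom(metrics) <= threshold;
}
```

If the user has scrolled up to reread an earlier answer, the function returns false and a streamed response does not yank the viewport away.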

On top of that, AIKit remains extensible. You can connect any models, create your own content types, and build the UI entirely for your tasks — leveraging logic from hooks or using ready-made components as a base. The architecture allows experimentation without breaking shared principles.

How to build your own chat

To create your first chat, we’ll use the prepared ChatContainer component:

import React, { useState } from 'react';

import { ChatContainer } from 'aikit';

import type { ChatType, MessageType } from 'aikit';

function App() {

const [messages, setMessages] = useState<MessageType[]>([]);

const [chats, setChats] = useState<ChatType[]>([]);

const [activeChat, setActiveChat] = useState<ChatType | null>(null);

const handleSendMessage = async (content: string) => {

// Your message sending logic

const response = await fetch('/api/chat', {

method: 'POST',

body: JSON.stringify({ message: content })

});

const data = await response.json();

// Update state

setMessages(prev => [...prev, data]);

};

return (

<ChatContainer

messages={[]}

onSendMessage={() => {}}

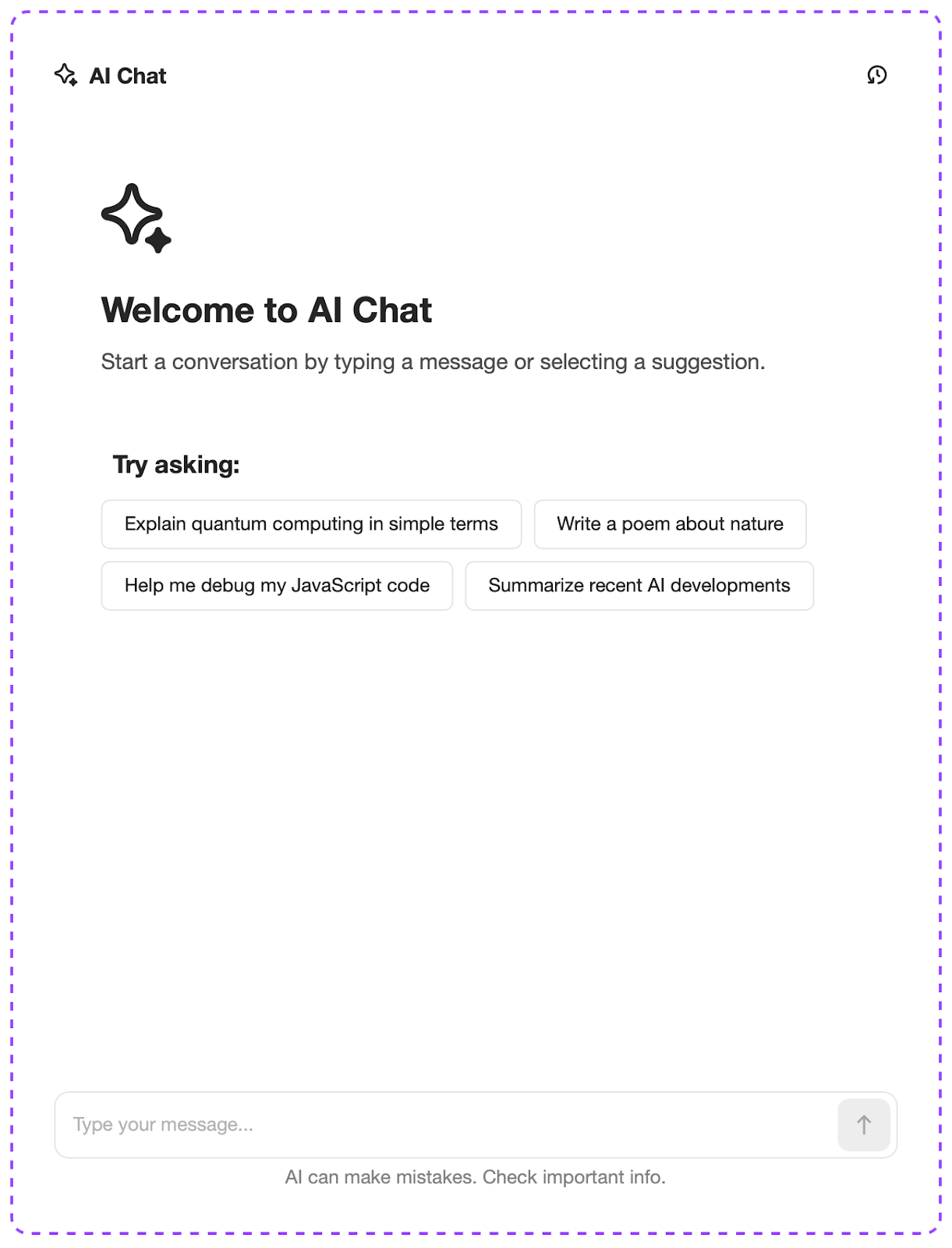

welcomeConfig={{

description: 'Start a conversation by typing a message or selecting a suggestion.',

image: <Icon data={() => {}} size={48}/>,

suggestionTitle: 'Try asking:',

suggestions: [

{

id: '1',

title: 'Explain quantum computing in simple terms'

},

{

id: '2',

title: 'Write a poem about nature'

},

{

id: '3',

title: 'Help me debug my JavaScript code'

},

{

id: '4',

title: 'Summarize recent AI developments'

}

],

title: 'Welcome to AI Chat'

}}

/>

);

}

Out of the box, it looks like this:
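The send handler above waits for the complete JSON response. If your backend streams the answer instead, the state update can be modeled as a small reducer that applies stream events to a draft assistant message. The sketch below is only an assumption about how that could look on the product side: `StreamEvent`, `AssistantDraft`, and `applyStreamEvent` are hypothetical names, not part of AIKit.

```typescript
// Events a streaming backend might emit (hypothetical contract).
type StreamEvent =
    | {type: 'delta'; text: string}
    | {type: 'done'}
    | {type: 'error'; message: string};

type AssistantDraft = {
    text: string;
    status: 'streaming' | 'done' | 'error';
    error?: string;
};

const emptyDraft: AssistantDraft = {text: '', status: 'streaming'};

function applyStreamEvent(draft: AssistantDraft, event: StreamEvent): AssistantDraft {
    // Ignore late events once the stream has finished or failed.
    if (draft.status !== 'streaming') {
        return draft;
    }
    switch (event.type) {
        case 'delta':
            return {...draft, text: draft.text + event.text};
        case 'done':
            return {...draft, status: 'done'};
        case 'error':
            // Keep the partial text so the UI can show it next to a retry action.
            return {...draft, status: 'error', error: event.message};
    }
}
```

While the status is `streaming`, the UI can show the "assistant is typing" indicator; on `error` it can offer a retry without losing the text received so far.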

Let’s add a bit of holiday spirit:

- Fix the initial state.

  For finer tuning, we’ll assemble the chat from separate components: Header, MessageList, PromptBox.

  ```tsx
  import { Header, MessageList, PromptBox } from '@gravity-ui/aikit';

  // `messages` and `handleSend` come from your own state and handlers
  function CustomChat() {
      return (
          <div className="custom-chat">
              <Header title="AI Assistant" onNewChat={() => {}} />
              <MessageList messages={messages} showTimestamp />
              <PromptBox onSend={handleSend} placeholder="Ask anything..." />
          </div>
      );
  }
  ```

- Apply different built-in message types imported via MessageType.

  - thinking: shows the AI’s reasoning process (so users can explore the logic the assistant uses to prepare an answer).
  - tool: works well for rendering interactive response blocks; in our case, it’s a code block with proper syntax highlighting and supported editing and clipboard copy operations.

  You can also add your own types, for example, image messages:

  ```tsx
  // Data shape inferred from how the renderer below uses it
  type ImageMessageData = { imageUrl: string; caption?: string };
  type ImageMessage = BaseMessage<ImageMessageData> & { type: 'image' };

  const ImageMessageView = ({ message }: { message: ImageMessage }) => (
      <div>
          <img src={message.data.imageUrl} alt={message.data.caption ?? ''} />
          {message.data.caption && <p>{message.data.caption}</p>}
      </div>
  );

  const customTypes: MessageTypeRegistry = {
      image: {
          component: ImageMessageView,
          validator: (msg) => msg.type === 'image',
      },
  };

  <ChatContainer messages={messages} messageTypeRegistry={customTypes} />
  ```

- Add styling via CSS…

…and we’ll get a chat with Ded Moroz (Santa Claus):)

For full customization of individual elements, you can use hooks — we’d love to see your styling variations in the comments under the article!

How AIKit impacted services

The result of using AIKit in Yandex Cloud became noticeable quickly. In all services, assistants started behaving the same way: streaming responses the same way, showing errors the same way, grouping messages the same way. The UX became consistent: assistants are now easier to use across the entire ecosystem, and their behavior is more predictable.

- A unified UX language: assistant chats in different products now feel like part of one ecosystem. Users see predictable behavior: the same streaming, error handling, and interaction patterns.
- Much faster chat UI development.
- Centralized evolution: new features like the thinking content type or improved tool handling are added once and automatically become available to everyone.
- The library became the foundation for shaping AI interface standards in the ecosystem.

What’s next

Now for the plans. We’ve identified several directions:

- Performance improvements via virtualization for working with very large chat histories.
- Expanding the core scenarios for new AI agent capabilities, which are actively developing.
- Adding utilities to simplify mapping data from popular AI models into our chat data model.

Additionally, we’ll continue improving the documentation and examples. And of course, community growth — we want the library to be useful not only inside the company, but also for external developers.
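For readers unfamiliar with virtualization, the core idea is to render only the messages near the viewport. The sketch below shows the basic window calculation under a simplifying assumption that every item has the same height; real chat messages vary in height, so a production solution needs measurement on top of this. `getVisibleRange` is a hypothetical helper, not a planned AIKit API.

```typescript
// Index range of items to render; `end` is exclusive.
type VisibleRange = {start: number; end: number};

function getVisibleRange(
    scrollTop: number,
    viewportHeight: number,
    itemHeight: number,
    itemCount: number,
    overscan = 3,
): VisibleRange {
    if (itemCount === 0 || itemHeight <= 0) {
        return {start: 0, end: 0};
    }
    // First and last items that intersect the viewport.
    const first = Math.floor(scrollTop / itemHeight);
    const last = Math.ceil((scrollTop + viewportHeight) / itemHeight);
    // Render a few extra items on each side to avoid flicker while scrolling.
    return {
        start: Math.max(0, first - overscan),
        end: Math.min(itemCount, last + overscan),
    };
}
```

With 10,000 messages of 50 px each and a 500 px viewport, only a few dozen items are rendered at any moment instead of the whole history.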

How to try AIKit

Go to the library section on our website. If you’re building your own AI assistant, want a fast and predictable chat UI, and already use Gravity UI (or are ready to try it), take a look at the README and examples. We’d also appreciate feedback — open an issue, send a PR, and tell us what else you need for your scenarios!

If you like our project, we’d appreciate a ⭐️ on AIKit and UIKit!

Ilya Lomtev

Frontend Developer